Neural networks are incredibly powerful, yet their inner workings often remain a mystery. Understanding how these models process data, make decisions, and adapt is crucial for enhancing performance and ensuring reliability. This is where neural network visualization becomes invaluable. By utilizing specialized tools, developers and researchers can examine neural layers, track activations, and uncover potential flaws in a model's design. Visualization acts as a bridge, turning raw data into something interpretable and human-understandable.

Without these tools, debugging deep learning models is much harder, and improving accuracy turns into a guessing game. This article walks through five widely used AI visualization tools and techniques and explains when each one is the right choice.

Why Neural Network Visualization Matters

Neural networks pass data through layers of weighted connections, performing millions of computations in seconds. These intermediate computations stay hidden unless we use visualization methods to expose them. Without that insight, it is hard to tell whether a model is overfitting, underfitting, or making poor predictions for some other reason.

Visualization is especially valuable in:

- Model Debugging: Finding weak links in a network's structure.

- Feature Attribution: Determining what inputs affect predictions the most.

- Bias Detection: Revealing hidden biases so that models can be made fairer.

- Performance Optimization: Identifying inefficiencies and optimizing model parameters.

Visualization tools allow AI practitioners to "see" what is going on within a deep learning system. They turn neural networks from cryptic black boxes into models that can be understood and optimized.

Important Tools for Neural Network Visualization

Visualizing a neural network helps explain both how it performs and why. The tools below show how models arrive at their decisions, which in turn makes model tuning more targeted.

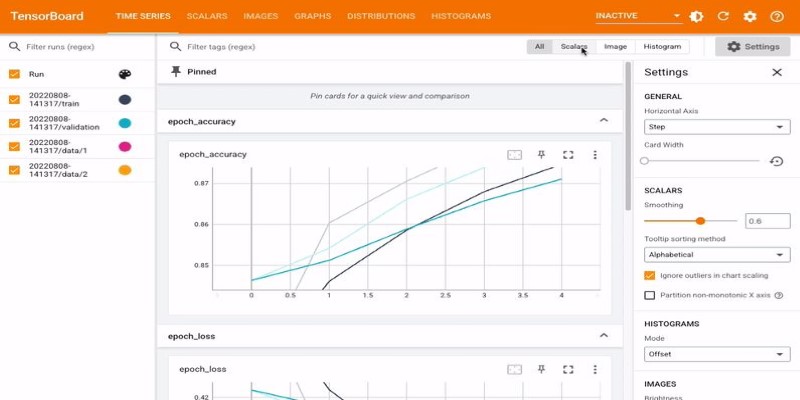

TensorBoard

TensorBoard is TensorFlow's visualization toolkit for monitoring and understanding deep learning models during training. Its interactive dashboards plot metrics such as loss and accuracy, along with distributions of model weights, as training proceeds. TensorBoard lets users inspect the model structure, follow training progress over time, and compare runs side by side.

TensorBoard can also render the model's computation graph, plot histograms of gradients, and display precision-recall curves, which simplifies the evaluation of complex neural networks. For TensorFlow users it offers a clear view of model behavior and a way to spot potential issues early in the training process.
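As a rough illustration of how metrics reach TensorBoard, the sketch below writes scalar summaries with TensorFlow's `tf.summary` API. The loss and accuracy values are synthetic stand-ins rather than real training results, and the function name `log_toy_run` and the tag names are assumptions made for this example.

```python
import math
import tempfile
from pathlib import Path

import tensorflow as tf


def log_toy_run(log_dir: str, steps: int = 50) -> None:
    """Write a synthetic loss/accuracy curve as TensorBoard scalar summaries."""
    writer = tf.summary.create_file_writer(log_dir)
    with writer.as_default():
        for step in range(steps):
            # Synthetic values standing in for metrics from a real training loop.
            loss = math.exp(-step / 10.0)
            accuracy = 1.0 - loss
            tf.summary.scalar("train/loss", loss, step=step)
            tf.summary.scalar("train/accuracy", accuracy, step=step)
    writer.flush()
    writer.close()


# Log into a temporary directory and list the event files that were written.
log_dir = tempfile.mkdtemp(prefix="tb_demo_")
log_toy_run(log_dir)
event_files = sorted(Path(log_dir).glob("events.out.tfevents.*"))
```

Pointing TensorBoard at the directory with `tensorboard --logdir <log_dir>` should then show both curves on the scalars dashboard. In a Keras workflow, the `tf.keras.callbacks.TensorBoard` callback can write comparable logs without a manual loop.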

Netron

Netron is an open-source viewer for deep learning models saved in formats such as ONNX, TensorFlow, and PyTorch. It is especially useful for inspecting and analyzing pre-trained models, allowing users to view the architecture without needing to run the model itself. Netron’s intuitive interface provides a layer-by-layer breakdown of model structures, helping users understand the connections and operations involved.

Because it works across frameworks, Netron is a flexible choice for developers who use different AI tools. It helps with debugging model inconsistencies, comparing architectures, and checking that a model is properly structured before deployment, all without executing any model code.

Captum

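Captum, developed by Facebook AI, is a model interpretability library for PyTorch. It offers several techniques to understand how deep learning models make decisions, helping researchers interpret model behavior. Its core features include Integrated Gradients, which attributes a prediction to input features by accumulating gradients along a path from a baseline input to the actual input; Layer-wise Relevance Propagation, which propagates the output score backward through the layers to assign relevance to each input; and perturbation-based methods such as occlusion and feature ablation, which measure how the output changes when parts of the input are altered.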

These techniques are particularly valuable in applications like medical diagnostics, fraud detection, and autonomous vehicles, where understanding model decisions is crucial. By highlighting which input features affect the model’s outputs, Captum provides much-needed transparency, empowering developers to refine their models while improving trust and accountability.
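To make the idea behind Integrated Gradients concrete, here is a minimal from-scratch sketch in NumPy. It does not use Captum; the logistic-regression "model", its weights, and the all-zeros baseline are assumptions chosen so that the gradient can be written by hand.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def model(x, w, b):
    """Toy differentiable model: logistic regression on a feature vector."""
    return sigmoid(x @ w + b)


def model_grad(x, w, b):
    """Analytic gradient of the model output with respect to the input x."""
    s = model(x, w, b)
    return s * (1.0 - s) * w


def integrated_gradients(x, baseline, w, b, steps=200):
    """Approximate Integrated Gradients with a midpoint Riemann sum."""
    alphas = (np.arange(steps) + 0.5) / steps
    # Average the gradient along the straight path from baseline to x.
    avg_grad = np.mean(
        [model_grad(baseline + a * (x - baseline), w, b) for a in alphas], axis=0
    )
    return (x - baseline) * avg_grad


w = np.array([2.0, -1.0, 0.0])  # hypothetical weights; the third feature is unused
b = 0.1
x = np.array([1.0, 0.5, 3.0])
baseline = np.zeros_like(x)  # assumed "no signal" reference input

attributions = integrated_gradients(x, baseline, w, b)
# Completeness check: attributions should sum to f(x) - f(baseline).
gap = attributions.sum() - (model(x, w, b) - model(baseline, w, b))
```

The first feature receives a positive attribution, the second a negative one, and the unused third feature receives none, while `gap` stays close to zero. With a real PyTorch model, Captum's `IntegratedGradients` class exposes the same idea through its `attribute` method.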

Lucid

Lucid is a visualization tool built on TensorFlow that is designed to help researchers analyze the behavior of convolutional neural networks (CNNs). It allows users to generate feature visualizations by synthesizing images that maximize neuron activations, helping them understand what each neuron has learned. Lucid's ability to visualize filter activations at various layers in CNNs enables users to gain insights into how different parts of an image influence model predictions.

Additionally, it helps track the evolution of features as a model is trained. Researchers can use Lucid to examine how CNNs “see” the world, making it an essential tool for improving the interpretability of deep learning models.
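The core idea of feature visualization, synthesizing an input that maximizes a neuron's activation, can be sketched without Lucid at all. The example below is a toy under stated assumptions: a single softplus "neuron" with a random 8x8 weight patch stands in for a CNN unit, and a unit-norm constraint replaces the regularizers and image parameterizations a real tool would use.

```python
import numpy as np


def softplus(z):
    # Numerically stable log(1 + exp(z)).
    return np.logaddexp(0.0, z)


def neuron_activation(image, weights, bias=0.0):
    """Toy 'neuron': a softplus unit over an 8x8 input patch."""
    return softplus(np.sum(weights * image) + bias)


def activation_gradient(image, weights, bias=0.0):
    """Analytic gradient of the activation with respect to the input image."""
    z = np.sum(weights * image) + bias
    return weights / (1.0 + np.exp(-z))  # sigmoid(z) * weights


def maximize_activation(weights, steps=300, lr=0.1, seed=0):
    """Gradient ascent on the input, keeping the image at unit norm."""
    rng = np.random.default_rng(seed)
    image = rng.normal(scale=0.01, size=weights.shape)  # start from faint noise
    for _ in range(steps):
        image = image + lr * activation_gradient(image, weights)
        image = image / np.linalg.norm(image)  # simple norm constraint
    return image


rng = np.random.default_rng(42)
weights = rng.normal(size=(8, 8))  # hypothetical learned filter
noise = rng.normal(size=(8, 8))
noise /= np.linalg.norm(noise)  # random unit-norm image for comparison

optimized = maximize_activation(weights)
# Cosine similarity between the synthesized image and the filter itself.
cosine = np.sum(optimized * weights) / np.linalg.norm(weights)
```

For this toy unit the synthesized image converges toward the filter pattern itself, which is the expected answer for a single linear-plus-softplus neuron. In a deep CNN the optimum is far less obvious, which is why dedicated tooling is useful.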

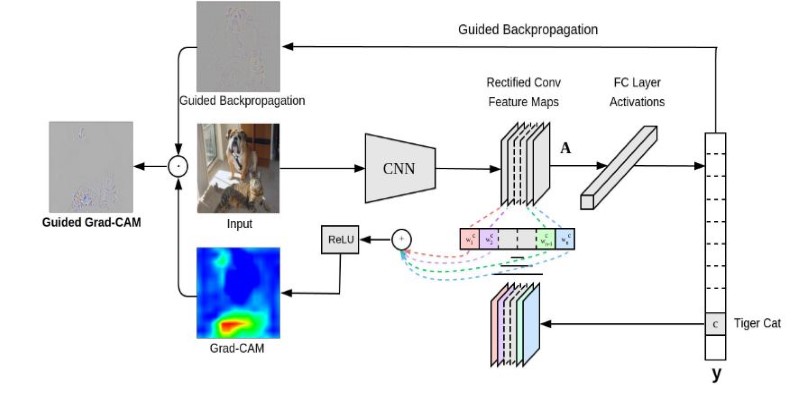

Grad-CAM

Gradient-weighted Class Activation Mapping (Grad-CAM) is a technique used to visualize which parts of an image contribute most to a model’s decision. It takes the gradients of the target class score with respect to the feature maps of a convolutional layer (typically the last one), averages them to get one importance weight per channel, and combines the weighted feature maps into a heatmap that highlights key areas of the image. It is especially useful in fields like image classification, object detection, and medical imaging, where understanding model decisions is crucial.

Unlike raw feature maps, a Grad-CAM heatmap is specific to the predicted class and can be overlaid on the input image, which makes it easier to assess whether the model is focusing on relevant features. This improves model explainability and helps developers debug and improve performance.
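The Grad-CAM computation itself is short once feature maps and gradients are available. The NumPy sketch below assumes a toy setup rather than a real CNN: two 4x4 feature maps feed a global-average-pooling plus linear head, so the gradients can be written analytically instead of being obtained from a framework's autograd. The function name `grad_cam` and all values are illustrative.

```python
import numpy as np


def grad_cam(feature_maps, grads):
    """Grad-CAM heatmap from conv feature maps and class-score gradients.

    Both arguments have shape (channels, height, width).
    """
    # Channel importance: global-average-pool the gradients.
    alphas = grads.mean(axis=(1, 2))
    # Weighted sum of feature maps, then ReLU to keep positive evidence only.
    cam = np.maximum(np.tensordot(alphas, feature_maps, axes=1), 0.0)
    # Scale to [0, 1] for display; guard against an all-zero map.
    peak = cam.max()
    return cam / peak if peak > 0 else cam


# Toy activations: each channel fires at a single, different location.
feature_maps = np.zeros((2, 4, 4))
feature_maps[0, 1, 1] = 1.0
feature_maps[1, 3, 3] = 1.0
# Hypothetical head weights: the target class favors channel 0, not channel 1.
class_weights = np.array([1.5, -0.5])

# For y = sum_k w_k * mean(A_k), dy/dA_k[i, j] = w_k / (H * W) everywhere.
height, width = feature_maps.shape[1:]
grads = np.broadcast_to(
    class_weights[:, None, None] / (height * width), feature_maps.shape
)

heatmap = grad_cam(feature_maps, grads)
```

The heatmap peaks where channel 0 fires and is zero where channel 1 fires, because the ReLU discards regions that count against the target class. With a real network, the low-resolution map would be upsampled to the input size before being overlaid on the image.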

Choosing the Right AI Visualization Tools

Selecting the best visualization tool depends on your use case. If you're primarily debugging models and tracking training progress, TensorBoard offers comprehensive monitoring. For model structure inspection, Netron provides a lightweight, framework-agnostic approach. When it comes to interpretability and feature attribution, Captum and Grad-CAM excel in explaining model decisions. For research into neural feature representations, Lucid helps uncover what neurons learn.

Combining multiple AI visualization tools often leads to better insights. For instance, using Grad-CAM alongside Captum can help validate feature attributions in vision models. Similarly, integrating Netron with TensorBoard provides both a static and dynamic understanding of neural networks.

Conclusion

Neural network visualization tools are essential for making deep learning models more transparent and understandable. By providing insights into model behavior, these tools help developers debug, optimize, and ensure the fairness of their AI systems. Whether you're using TensorBoard for monitoring, Captum for feature attribution, or Grad-CAM for visualizing model focus, each tool plays a crucial role in improving model interpretation. As AI continues to advance, leveraging these visualization tools will remain key to developing more reliable, interpretable, and trustworthy models, empowering both researchers and practitioners to make informed decisions.