In machine learning, an epoch represents a full training cycle in which a model processes the entire dataset once. This term is fundamental in deep learning and artificial intelligence, yet it often confuses beginners. The idea behind an epoch is simple: training a model isn't about looking at data just once; instead, it involves multiple passes over the same dataset to refine the model's ability to make predictions.

Each epoch adjusts the model's internal parameters, improving its accuracy over time. However, choosing the right number of epochs is crucial. Too few, and the model remains undertrained. Too many, and it risks overfitting. Understanding epochs is essential for optimizing machine learning performance and ensuring models generalize well.

What Does an Epoch Mean in Machine Learning?

An epoch in machine learning is one full pass of the training data through the model. Machine learning models do not learn all at once. They require multiple exposures to the data to tune their parameters and reduce errors. In an epoch, the model processes every data point, adjusting its weights according to a loss function that quantifies its prediction errors.
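To make the idea of repeated passes concrete, here is a minimal sketch of a training loop in plain Python. It fits a straight line with per-sample gradient descent on squared error; the function name `train_linear_model`, the toy data, and the hyperparameter values are assumptions chosen for illustration, not a recommended setup.

```python
import random

def train_linear_model(data, epochs=200, learning_rate=0.1, seed=0):
    """Fit y = w * x + b with per-sample gradient descent on squared error."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    samples = list(data)
    loss_history = []
    for epoch in range(epochs):
        rng.shuffle(samples)              # visit the points in a new order each epoch
        total_loss = 0.0
        for x, y in samples:              # one epoch = every data point seen once
            error = (w * x + b) - y
            total_loss += error ** 2
            # Gradient of the squared error with respect to w and b.
            w -= learning_rate * 2 * error * x
            b -= learning_rate * 2 * error
        # Average squared error observed while this epoch was running.
        loss_history.append(total_loss / len(samples))
    return w, b, loss_history

# Toy data generated from y = 2x + 1 (hypothetical, for illustration only).
points = [(x / 10, 2 * (x / 10) + 1) for x in range(10)]
w, b, history = train_linear_model(points)
print(f"w={w:.3f}, b={b:.3f}")
print(f"loss after epoch 1: {history[0]:.4f}, after final epoch: {history[-1]:.8f}")
```

The loss recorded after the first epoch is much higher than the loss after the last one, which is the gradual refinement that multiple epochs are meant to provide.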

Epoch is closely associated with two related terms: batch size and iteration. Because a dataset is often too large to process in a single step or to hold in memory, it is split into smaller subsets called batches. Each batch is passed through the model individually, and processing one batch counts as one iteration. One pass through all the batches is an epoch, so the number of iterations per epoch equals the number of batches needed to cover the dataset.
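The relationship between dataset size, batch size, and iterations comes down to simple arithmetic. The sketch below assumes a dataset of 1,000 samples and a batch size of 32; the helper names and the `drop_last` flag (whether to discard a final partial batch) are hypothetical and introduced here only for illustration.

```python
import math

def iterations_per_epoch(num_samples, batch_size, drop_last=False):
    """Number of batches needed to pass over the dataset once."""
    if num_samples < 0 or batch_size <= 0:
        raise ValueError("num_samples must be >= 0 and batch_size must be > 0")
    if drop_last:
        return num_samples // batch_size       # discard the final partial batch
    return math.ceil(num_samples / batch_size)  # keep the final partial batch

def batches(dataset, batch_size):
    """Yield consecutive slices of the dataset, each at most batch_size long."""
    for start in range(0, len(dataset), batch_size):
        yield dataset[start:start + batch_size]

dataset = list(range(1000))
steps = iterations_per_epoch(len(dataset), batch_size=32)
batch_sizes = [len(batch) for batch in batches(dataset, 32)]

print(steps)            # 32 iterations per epoch
print(batch_sizes[-1])  # the last batch holds the remaining 8 samples
print(steps * 10)       # 320 iterations in total over 10 epochs
```

With these numbers, 31 full batches cover 992 samples and a final smaller batch covers the remaining 8, giving 32 iterations per epoch.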

A single epoch is seldom sufficient for a model to learn patterns properly. Training usually spans several epochs so that the model can improve incrementally. The goal is to strike a balance where the model learns well without memorizing the training data, which would result in poor generalization to new inputs.

How Many Epochs Are Needed?

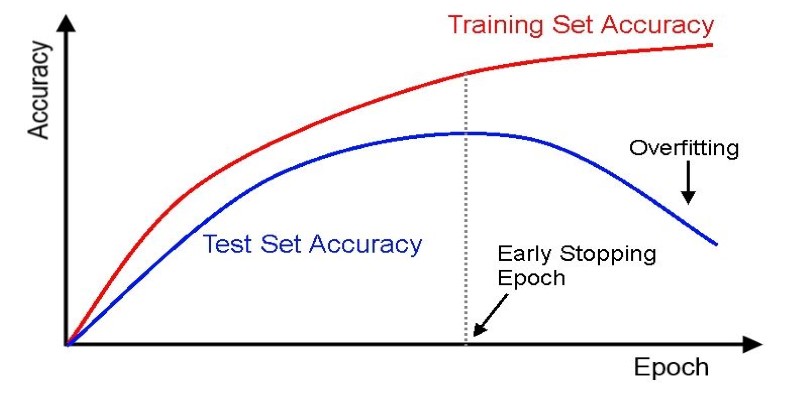

Deciding how many epochs to train a machine learning model is both an art and a science. Too few epochs may leave the model underfitted, meaning it has not learned enough to make accurate predictions. Too many epochs, on the other hand, may lead to overfitting, where the model becomes too specialized in the training data and struggles with new inputs.

The ideal number of epochs varies depending on the dataset, model complexity, and problem being solved. Common strategies to determine the optimal number include:

Early Stopping: This technique tracks validation performance and halts training once it stops improving. It avoids unnecessary epochs, which limits overfitting and saves computation.

Cross-Validation: Splitting the data into multiple folds and evaluating on each held-out portion shows how a candidate epoch count performs across different subsets. An epoch count that works well on every fold is a more reliable choice than one tuned on a single split, reducing the risk of overfitting or underfitting.

Learning Curves: Accuracy or loss plotted over epochs reveals when performance stabilizes. Identifying this point helps avoid excessive training, ensuring an efficient model without unnecessary computational overhead.
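As a rough illustration of early stopping, the sketch below replays a recorded list of validation losses and reports when training would have stopped. The function name, the `patience` and `min_delta` parameters, and the loss values are all assumptions made for this example; in a real run the validation loss would be computed at the end of each epoch rather than read from a list.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return (stop_epoch, best_epoch), both 1-indexed.

    Training stops once the validation loss has failed to improve by more
    than min_delta for `patience` consecutive epochs.
    """
    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best_loss - min_delta:
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch
    # Patience never ran out: training used every recorded epoch.
    return len(val_losses), best_epoch

# Hypothetical validation losses, one per epoch.
val_losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.51, 0.53, 0.60]
stop_epoch, best_epoch = early_stopping_epoch(val_losses, patience=3)
print(f"stopped at epoch {stop_epoch}, best epoch was {best_epoch}")
# prints "stopped at epoch 7, best epoch was 4"
```

In practice the model weights from the best epoch are usually the ones kept, which is why the sketch returns both values.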

Some deep learning models may require hundreds of epochs, while models trained on very large datasets are often trained for only a few passes. Modern optimizers and techniques such as adaptive learning rates can also reduce the number of epochs needed to reach a given level of performance.

The Impact of Epochs on Model Performance

Epochs play a crucial role in shaping a model’s accuracy and generalization. Each additional epoch allows the model to refine its internal parameters, improving prediction accuracy. However, more epochs do not always lead to better results. The challenge lies in finding the right number of training cycles to avoid underfitting or overfitting.

Underfitting occurs when training stops too early, preventing the model from learning essential patterns. With too few epochs, the model remains undertrained and struggles to make accurate predictions on both training and unseen data. Conversely, overfitting happens when training continues for too long, causing the model to memorize data instead of recognizing generalizable patterns. This results in poor real-world performance, where the model excels on training data but fails on new inputs.

The ideal number of epochs depends on the complexity of the model and the dataset. Simpler models with fewer parameters require fewer epochs, while deep learning models often need longer training cycles to extract meaningful patterns. Training should continue until the loss function stabilizes, signaling convergence. Once the model stops improving, additional epochs become unnecessary, wasting computational resources. Proper monitoring through validation techniques ensures optimal performance without excessive training.
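One simple way to express "the loss function stabilizes" is to check whether the loss has changed by less than a small relative tolerance over the last few epochs. The sketch below is one such heuristic under stated assumptions: the function names, the `window` and `tolerance` values, and the loss history are hypothetical, and other convergence criteria are equally reasonable.

```python
def has_converged(loss_history, window=3, tolerance=1e-3):
    """True when the loss changed by less than `tolerance` (relative)
    between the latest epoch and the epoch `window` steps earlier."""
    if len(loss_history) <= window:
        return False                      # not enough epochs to compare yet
    previous = loss_history[-window - 1]
    current = loss_history[-1]
    if previous == 0:
        return True                       # loss is already zero
    return abs(previous - current) / abs(previous) < tolerance

def first_converged_epoch(loss_history, window=3, tolerance=1e-3):
    """Return the first 1-indexed epoch at which has_converged is True, or None."""
    for epoch in range(1, len(loss_history) + 1):
        if has_converged(loss_history[:epoch], window, tolerance):
            return epoch
    return None

# Hypothetical training losses, one per epoch.
losses = [1.0, 0.5, 0.3, 0.25, 0.2499, 0.2499, 0.2498, 0.2498]
print(first_converged_epoch(losses))  # 7
```

Here the loss drops quickly for four epochs and then barely moves, so the check first passes at epoch 7, when the loss differs from its value three epochs earlier by less than 0.1%.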

Finding the Right Balance Between Efficiency and Accuracy

Training a machine learning model is a balancing act between efficiency and accuracy. Running too many epochs can be computationally expensive and time-consuming. More importantly, an excessively trained model may fail to generalize well to unseen data, which is the ultimate goal of machine learning.

A good strategy is to start with a reasonable number of epochs and monitor the model's learning curve. Tracking the training loss and validation accuracy makes it easier to determine the ideal stopping point. Techniques such as learning rate schedules and regularization can also improve training efficiency while maintaining accuracy.

In real-world scenarios, practitioners often experiment with different epoch values to optimize performance. Tools like TensorFlow and PyTorch provide features that make tracking and adjusting epochs easier, allowing for more flexible and efficient training processes.

Conclusion

Epochs are fundamental in machine learning, determining how many times a model processes the entire dataset during training. They influence accuracy, efficiency, and generalization. A single epoch is often insufficient, while excessive epochs risk overfitting, where the model memorizes rather than learns patterns. Techniques like early stopping and cross-validation help optimize the number of epochs, preventing wasted computation and poor real-world performance. The right balance ensures the model learns effectively without unnecessary complexity. Machine learning practitioners must monitor training progress to refine accuracy while avoiding inefficiency. Choosing the correct number of epochs is key to building reliable, well-generalized models for practical applications.