When first diving into the fascinating universe of artificial intelligence and deep learning, you’ll inevitably encounter various technical terms and complex functions that might initially seem intimidating. But rest assured, many of these concepts, including activation functions like Softmax, are simpler and more intuitive than they first appear. Think of activation functions as little decision-makers inside your neural network, determining how the model behaves and learns from the input data.

Among these decision-makers, the Softmax activation function stands out, especially in problems involving classification. Understanding Softmax not only illuminates neural network decisions but also makes you appreciate the simplicity behind some of AI's seemingly intricate processes.

Understanding Activation Functions

Before focusing specifically on Softmax, it's vital to grasp what activation functions do in general. At their heart, neural networks consist of layers stacked neatly on top of each other. Each layer processes information before passing it to the next. Here’s where activation functions come into play. They decide how much or how little the information from one neuron in a layer should influence the neurons in the following layer.

Imagine activation functions as volume knobs adjusting the intensity of signals moving across a network. Without them, every layer would be a purely linear transformation, and any stack of linear layers collapses into a single linear transformation. The whole network could then do no more than a linear model such as linear regression, and it would lose its ability to handle the nuanced complexities of the real world. Activation functions introduce non-linearity, which is what gives your network the power to model intricate relationships in data.
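To make the point about linearity concrete, here is a minimal sketch with one input and one neuron per layer. The weights and biases are arbitrary illustrative values, not taken from any real model. It shows that two linear layers without an activation behave exactly like one linear layer, while inserting ReLU between them changes the mapping.

```python
# Two "layers" with no activation function: y = w2 * (w1 * x + b1) + b2.
# All weights and biases are hypothetical values chosen for illustration.
def two_linear_layers(x, w1=2.0, b1=1.0, w2=-3.0, b2=0.5):
    hidden = w1 * x + b1
    return w2 * hidden + b2

# The same mapping as a single linear layer:
# w = w2 * w1 = -6.0 and b = w2 * b1 + b2 = -2.5.
def one_linear_layer(x, w=-6.0, b=-2.5):
    return w * x + b

def relu(z):
    # ReLU sets negative values to zero and keeps positive values unchanged.
    return max(0.0, z)

# Same two layers, but with ReLU applied to the hidden value.
def two_layers_with_relu(x, w1=2.0, b1=1.0, w2=-3.0, b2=0.5):
    hidden = relu(w1 * x + b1)
    return w2 * hidden + b2

for x in [-2.0, -1.0, 0.0, 1.0, 2.0]:
    print(x, two_linear_layers(x), one_linear_layer(x), two_layers_with_relu(x))
```

The first two columns of output match for every input, while the ReLU version differs wherever the hidden value would have been negative.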

Various activation functions exist, including ReLU, Sigmoid, Tanh, and Softmax, each with its own strengths. While ReLU introduces non-linearity by setting negative values to zero and passing positive values through unchanged, Softmax is particularly handy when your neural network’s task involves distinguishing between multiple possible categories.

The Softmax Activation Function Explained

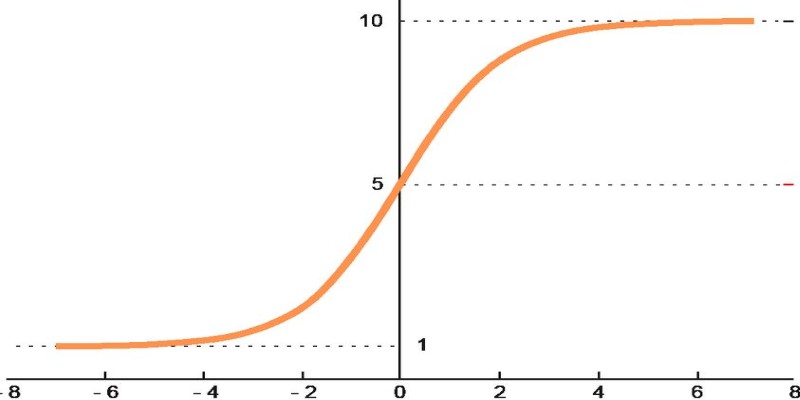

So, what exactly is the Softmax activation function? In simple terms, Softmax is a mathematical function that takes a group of numbers and converts them into probabilities. It scales these values between 0 and 1, allowing you to easily interpret the output as likelihoods that a given input belongs to different classes or categories. This scaling process ensures that the sum of the probabilities always equals one—a basic requirement for probability distributions.

To visualize this, consider a practical example. Imagine your neural network attempts to recognize animals in images: cats, dogs, or birds. After processing an image, the network might produce raw numerical outputs like [2.5, 1.0, 0.5]. These numbers alone don't offer a clear indication of certainty or doubt. Softmax steps in, converting them into probabilities of roughly [0.74, 0.16, 0.10], which shows that the model is about 74% confident it sees a cat, much less confident about a dog, and doubtful about it being a bird.

Mathematically, Softmax achieves this transformation through exponentiation followed by normalization. It takes each numerical output, exponentiates it, and then divides it by the sum of all the exponentiated values. Exponentiation makes every value positive, and the division makes the results sum to one. Because the exponential grows so quickly, this process also widens the gaps between scores: the largest inputs receive most of the probability, while much smaller inputs are pushed toward zero.
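The exponentiate-then-normalize recipe can be sketched in a few lines of plain Python. This is a minimal illustration, not a production implementation, applied to the raw scores [2.5, 1.0, 0.5] from the animal example; the function name `softmax` is simply a convenient label.

```python
import math

def softmax(scores):
    """softmax(z)_i = exp(z_i) / sum_j exp(z_j)"""
    # Step 1: exponentiate every raw score (all results are positive).
    exps = [math.exp(s) for s in scores]
    # Step 2: divide by the total so the outputs sum to one.
    total = sum(exps)
    return [e / total for e in exps]

probs = softmax([2.5, 1.0, 0.5])      # scores for cat, dog, bird
print([round(p, 2) for p in probs])   # [0.74, 0.16, 0.1]
print(sum(probs))                     # 1.0, up to floating-point rounding
```

The largest score (2.5) ends up with about 74% of the probability, even though it is only 1.5 higher than the next score.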

Why Softmax Matters in Neural Networks

Understanding Softmax's practical significance involves investigating classification tasks deeply embedded in real-world applications. When a neural network is used for tasks that require categorizing or identifying classes, such as image recognition, sentiment analysis, or even predicting disease probabilities, Softmax becomes a natural and crucial choice.

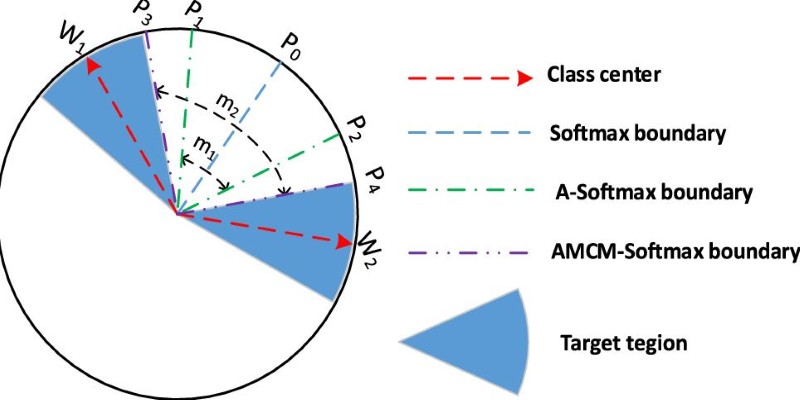

Why exactly? Well, Softmax doesn't just generate probabilities; it also naturally accommodates scenarios involving multiple classes. Sigmoid, a close cousin, is the usual choice for two-class decisions, and Softmax generalizes it to any number of classes. With exactly two classes, Softmax gives the same result as a Sigmoid applied to the difference between the two scores. This makes Softmax a natural fit for the output layer in tasks ranging from recognizing handwritten digits (think MNIST) to advanced natural language processing problems.
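The kinship between Sigmoid and Softmax can be checked numerically. The sketch below uses two hypothetical raw scores and shows that the Softmax probability of the first class equals the Sigmoid of the difference between the two scores.

```python
import math

def softmax(scores):
    exps = [math.exp(s) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical raw scores for a two-class problem.
a, b = 1.2, -0.4

p_softmax = softmax([a, b])[0]   # probability of the first class via Softmax
p_sigmoid = sigmoid(a - b)       # Sigmoid of the score difference

print(p_softmax, p_sigmoid)      # the two values agree up to rounding
```

The agreement follows from dividing the numerator and denominator of exp(a) / (exp(a) + exp(b)) by exp(a), which leaves 1 / (1 + exp(-(a - b))).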

Moreover, Softmax pairs naturally with cross-entropy loss, a popular loss function for training classification networks. Cross-entropy looks at the probability Softmax assigned to the correct class and penalizes the network more heavily the lower that probability is. This tells your neural network how far off its prediction was and lets it learn efficiently from its mistakes.
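As a rough illustration of how the two fit together, the sketch below computes cross-entropy for a single example as the negative log of the probability given to the true class. The helper name `cross_entropy` and the choice of scores are assumptions made for this example; deep learning libraries usually fuse Softmax and the loss into one numerically safer operation.

```python
import math

def softmax(scores):
    exps = [math.exp(s) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(probs, true_index):
    # Loss for one example: negative log of the probability
    # assigned to the correct class.
    return -math.log(probs[true_index])

probs = softmax([2.5, 1.0, 0.5])        # cat, dog, bird

loss_if_cat = cross_entropy(probs, 0)   # about 0.31: confident and correct
loss_if_bird = cross_entropy(probs, 2)  # about 2.31: confident and wrong

print(round(loss_if_cat, 2), round(loss_if_bird, 2))
```

The loss is small when the true class received a high probability and grows quickly as that probability approaches zero.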

However, Softmax does come with caveats. One essential thing to remember is that Softmax presupposes mutual exclusivity among classes. Simply put, the network assumes each input belongs to exactly one category, so raising the probability of one class necessarily lowers the others. In scenarios where an input might belong to several classes at once (multi-label classification), a common alternative is to apply an independent Sigmoid to each class output so that every class gets its own yes-or-no probability.
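For the multi-label case, one common alternative is to score each class independently with a Sigmoid. The sketch below assumes hypothetical raw scores for an image that contains both a cat and a dog, and uses a 0.5 threshold, which is a conventional but arbitrary choice.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical raw scores for an image showing a cat and a dog together.
scores = {"cat": 2.0, "dog": 1.5, "bird": -3.0}

# Each class gets its own independent probability.
probs = {label: sigmoid(s) for label, s in scores.items()}

# Keep every class whose probability clears the threshold.
predicted = [label for label, p in probs.items() if p >= 0.5]

print(predicted)             # ['cat', 'dog']
print(sum(probs.values()))   # greater than 1: these do not form one distribution
```

Unlike Softmax outputs, these probabilities are not forced to sum to one, so two classes can both be highly likely at once.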

Avoiding Common Mistakes with Softmax

Like any powerful tool, Softmax has a few pitfalls, and knowing how and when to apply it can save you from common mistakes. A frequent issue beginners face is numerical instability caused by exponentiating very large numbers. The result can exceed what floating-point numbers can represent, producing overflow errors or infinite and NaN values that corrupt the calculation. Luckily, addressing this concern is straightforward. Subtract the largest input value from all the inputs before applying exponentiation. Every exponent is then at most zero, so nothing overflows, and the resulting probabilities are exactly the same because the shift cancels out in the normalization step.
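The max-subtraction trick can be sketched as follows. The function names `naive_softmax` and `stable_softmax` are labels chosen for this example. In plain Python, `math.exp` raises `OverflowError` for very large arguments; array libraries such as NumPy typically return `inf` with a warning instead, which then turns into NaN after the division.

```python
import math

def naive_softmax(scores):
    exps = [math.exp(s) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def stable_softmax(scores):
    # Shift all scores so the largest becomes 0; every exponent is then <= 0.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

large = [1000.0, 999.0, 998.0]

try:
    naive_softmax(large)
    naive_overflowed = False
except OverflowError:
    naive_overflowed = True      # math.exp(1000.0) is too large for a float

stable = stable_softmax(large)
print(naive_overflowed)                  # True
print([round(p, 3) for p in stable])     # [0.665, 0.245, 0.09]

# On ordinary inputs the two versions agree, since the shift cancels out.
small = [2.5, 1.0, 0.5]
print(naive_softmax(small))
print(stable_softmax(small))
```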

Furthermore, it’s important to realize Softmax isn’t ideal for every situation. Specifically, Softmax is best suited for classification-oriented problems, where each input must fall into one of several distinct categories. If your task involves regression, which entails predicting continuous values such as stock prices, temperatures, or house prices, Softmax isn't appropriate. For these scenarios, the output layer typically uses a linear (identity) activation, or ReLU when the target can never be negative, so the outputs are not forced into a probability distribution.

Conclusion

Understanding the Softmax activation function provides an insightful gateway into the mechanics of neural networks. It translates raw computational outputs into meaningful probabilities, simplifying complex classification tasks and enhancing model interpretability. By scaling outputs between zero and one, Softmax elegantly supports multi-class problems, making it invaluable for image recognition, language processing, and beyond. Embracing this fundamental tool underscores the simplicity underlying neural network complexities. As you delve deeper into artificial intelligence, appreciate how clear understanding transforms challenging concepts into approachable knowledge. The Softmax function exemplifies this perfectly—showing us that even intricate algorithms ultimately rest upon intuitive, straightforward ideas.